Media

Facebook shifts fake news onus on readers

Instead of taking responsibility, the social media giant asks users to investigate fake news

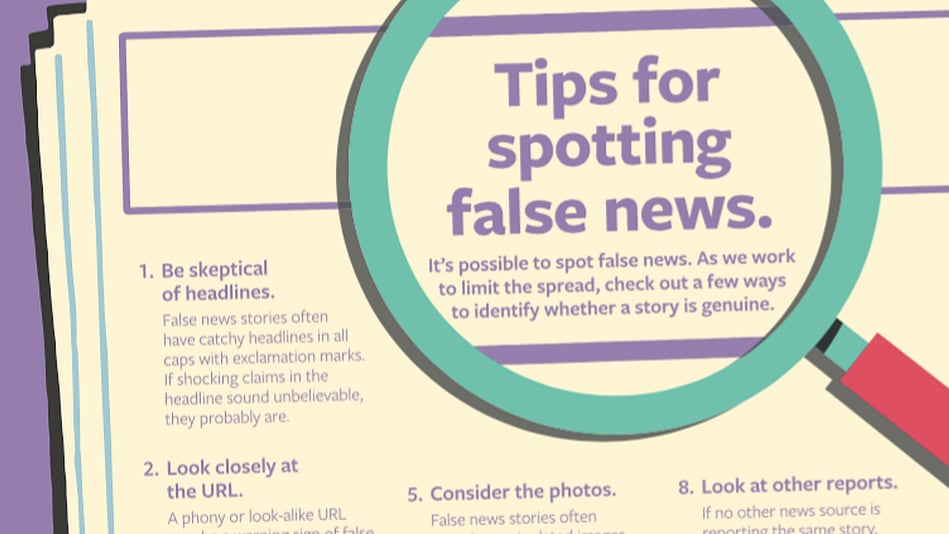

Facebook wants us to know that it is fighting fake news in the sub-continent. India is the largest user base for both Facebook and WhatsApp, with 241 million and 200 million users respectively. And what does it do? It releases full-page ads in the Deccan Herald, The Indian Express and The Telegraph with a 10-tip advisory on how to identify fake news.

Seen against the backdrop of EU regulators threatening action against the digital giant if it does not remove “illegal content inciting hatred, violence and terrorism online” following a ProPublica exposé, it would appear that Facebook, at least in India, is passing the buck to the consumer. Mark Zuckerberg’s company is also facing the heat from the US Congress, which had asked it to turn over more than 3,000 politically themed advertisements bought by suspected Russian operatives to influence US voters.

The advertisements published in Indian media raise a question: is Facebook passing the responsibility on to readers and turning them into investigators? Instead of setting vetting standards and stricter guidelines for creators of fake news, the social media giant is pointing a finger at gullible human nature and making users responsible for their news feed, which is controlled by Facebook’s algorithms. By using the words ‘spotting’ and ‘limit’ in the advertisement, rather than ‘identifying’ and ‘stop’, it makes the process seem like a fun game. This trivialises the issue and amounts to a refusal to take responsibility for the menace.

A 1993 study by psychologist Daniel Gilbert found that, without time for reflection, people simply believe what they read. The study examined the French philosopher René Descartes’s argument that understanding and believing are two separate processes, and the Dutch philosopher Baruch Spinoza’s view that to understand information is first to believe it. We may change our minds afterwards, Spinoza held, when we come across contrary evidence, but until then we believe everything. In this era of information overload, we don’t necessarily pause to think about every news item as we scroll.

What does this mean? It means that whatever the Facebook algorithm throws up on our news feed, we are likely to believe unless it is proved otherwise. So shouldn’t accountability for fake news lie with the platform? It is the platform, after all, that tailors news feeds so that certain people are more likely to see certain content than others.

Facebook would definitely rather do less, because if it built better algorithms to weed out fake news before it was shared multiple times, more centrist views would pop up on news feeds. Such feeds are definitely less addictive, which could mean people spend less time on Facebook and thereby generate less revenue. For a profit-making company, less money is not in its interest. So what does Facebook do? Pretending to be concerned, it subtly shifts the responsibility to the reader.

Published: undefined
